Robots.txt Generator

About the Robots.txt Generator

- Introduction:

Websites play a central role in establishing an online presence, but not every website owner wants web crawlers to visit every part of a site. This is where the "robots.txt" file comes into play. Located at the root of a website, the robots.txt file tells web crawlers which parts of the site they may crawl. Writing the file by hand can be daunting, and a Robots.txt Generator simplifies the process so that website owners can produce this file with ease.

- Simplifying Website Indexing and Crawling:

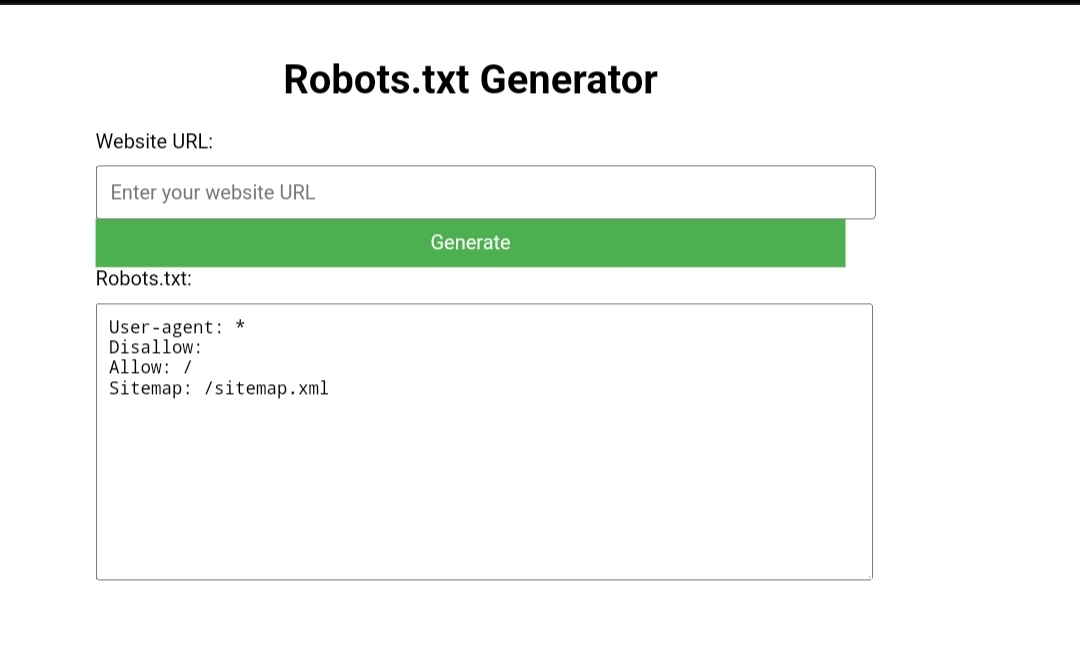

A Robots.txt Generator is a web-based tool designed to assist website owners in creating and customizing their robots.txt file. It eliminates the need to manually write the directives, making it accessible even to those without advanced technical knowledge. With just a few clicks, users can generate a robots.txt file tailored to their specific needs.

The Robots.txt Generator typically includes a user-friendly interface where website owners can input their desired instructions. This may include specifying which parts of the website should be disallowed from crawling, allowing or disallowing specific user agents, and declaring the location of the sitemap. Additionally, advanced options may be available, allowing customization of crawl delay and other specific directives.
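The core of such a tool can be sketched in a few lines of Python. This is a minimal illustration, not the implementation of any particular generator: the `AgentRules` class, the `build_robots_txt` function, the `ExampleBot` user agent, and the example.com URLs are all hypothetical names chosen for the example.

```python
from dataclasses import dataclass, field
from typing import Iterable, List, Optional


@dataclass
class AgentRules:
    """Directives for one user agent (hypothetical helper type)."""
    user_agent: str = "*"
    disallow: List[str] = field(default_factory=list)
    allow: List[str] = field(default_factory=list)
    crawl_delay: Optional[int] = None


def build_robots_txt(groups: Iterable[AgentRules],
                     sitemaps: Iterable[str] = ()) -> str:
    """Render rule groups and sitemap URLs as robots.txt text."""
    lines: List[str] = []
    for group in groups:
        lines.append(f"User-agent: {group.user_agent}")
        for path in group.allow:
            lines.append(f"Allow: {path}")
        for path in group.disallow:
            lines.append(f"Disallow: {path}")
        if not group.allow and not group.disallow:
            # An empty Disallow value means nothing is blocked.
            lines.append("Disallow:")
        if group.crawl_delay is not None:
            lines.append(f"Crawl-delay: {group.crawl_delay}")
        lines.append("")  # blank line between groups
    for url in sitemaps:
        lines.append(f"Sitemap: {url}")
    return "\n".join(lines).rstrip("\n") + "\n"


if __name__ == "__main__":
    rules = [
        AgentRules(user_agent="*", disallow=["/admin/", "/cart/"]),
        AgentRules(user_agent="ExampleBot", disallow=["/"], crawl_delay=10),
    ]
    print(build_robots_txt(rules, sitemaps=["https://example.com/sitemap.xml"]))
```

Each `AgentRules` entry becomes one `User-agent` group, and sitemap locations are listed after the groups. Note that `Crawl-delay` is a non-standard directive that some crawlers ignore.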

- The Benefits of Using a Robots.txt Generator:

Using a Robots.txt Generator offers several benefits for website owners. Firstly, it streamlines the process of creating a robots.txt file, saving time and effort. Instead of manually writing complex directives, website owners can input their preferences through a simple and intuitive interface.

Secondly, a Robots.txt Generator reduces the risk of syntax errors in the generated file. Typos and malformed directives that creep in when the file is written by hand can accidentally block important pages or leave areas open to crawling that were meant to be excluded. The generator can only be as accurate as the paths entered into it, so the output is still worth reviewing.

Furthermore, a Robots.txt Generator enables website owners to customize their directives to suit their specific requirements. Whether it's disallowing access to certain directories, preventing crawling of specific file types, or fine-tuning instructions for particular user agents, the generator provides the flexibility to tailor the robots.txt file accordingly.
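To see how per-agent directives play out, the rules can be loaded into Python's standard `urllib.robotparser` module and queried. This is a small sketch under stated assumptions: the sample rules, the `ExampleBot` name, the `load_rules` helper, and the example.com URLs are made up for illustration. The standard library parser matches paths by simple prefix and does not interpret wildcard patterns, so file-type rules are left out here.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules: a default group plus a stricter group for one crawler.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /downloads/

User-agent: ExampleBot
Disallow: /
Crawl-delay: 10
"""


def load_rules(text: str) -> RobotFileParser:
    """Parse robots.txt text without fetching anything over the network."""
    parser = RobotFileParser()
    parser.parse(text.splitlines())
    return parser


if __name__ == "__main__":
    rules = load_rules(ROBOTS_TXT)
    for agent in ("Googlebot", "ExampleBot"):
        for url in ("https://example.com/blog/post",
                    "https://example.com/admin/login"):
            print(agent, url, rules.can_fetch(agent, url))
        print(agent, "crawl delay:", rules.crawl_delay(agent))
```

A crawler that has its own group follows only that group, so `ExampleBot` is shut out of the whole site here while other crawlers are kept out of `/admin/` and `/downloads/` only.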

- The Ease of Implementation and Accessibility:

Robots.txt Generators are typically web-based tools that require no installation or technical setup. They can be accessed from any web browser, making them highly accessible for website owners across various platforms and devices. Additionally, these generators often provide responsive designs, ensuring a seamless experience on both desktop and mobile devices.

Moreover, Robots.txt Generators are often designed to be user-friendly, with clear instructions and intuitive interfaces. They guide website owners through the process of generating the robots.txt file step by step, ensuring that even novice users can create an effective robots.txt file.

- Conclusion:

In conclusion, a Robots.txt Generator is a valuable tool for website owners who want to control how their site is crawled. By simplifying the creation of a robots.txt file, these generators let website owners specify their directives with little effort. With accessibility, ease of implementation, and flexibility, Robots.txt Generators support a website's overall search engine optimization strategy and communicate the owner's crawling preferences to the search engine crawlers that honor the file. This can lead to more effective management of how website content appears in search results.

Remember that each website's needs may vary, and consulting with SEO professionals or referring to search engine guidelines can provide additional insights on using robots.txt effectively.

FAQ:

Q. What is a robots.txt file?

A. A robots.txt file is a plain text file placed in the root directory of a website. It tells web crawlers, also called robots, which parts of the site they may crawl, and so helps control how search engines access the website's content.

Q. Why is a robots.txt file important?

A. A robots.txt file lets website owners control which parts of their site search engines crawl and which parts they skip. It helps keep crawlers away from duplicate or low-value pages and can support a site's overall SEO (Search Engine Optimization). Because the file is publicly readable and compliance is voluntary, it should not be relied on to hide sensitive content.

Q. What is a Robots.txt Generator?

A. A Robots.txt Generator is an online tool that simplifies the process of creating a robots.txt file. It provides a user-friendly interface where website owners can input their desired instructions and generate the robots.txt file without the need for manual coding.

Q. How does a Robots.txt Generator work?

A. A Robots.txt Generator typically lets users specify which pages or directories search engine robots may or may not crawl. Users enter their preferences, including user agent instructions, crawl delay, and sitemap location, and the tool produces the corresponding robots.txt file.

Q. Can I customize the generated robots.txt file?

A. Yes, most Robots.txt Generators offer customization options. You can specify the directives for disallowing or allowing specific pages, directories, or file types based on your website's structure and requirements. Advanced options may include setting crawl delay or excluding specific user agents.

Q. Is using a Robots.txt Generator necessary?

A. Using a Robots.txt Generator is not mandatory, but it simplifies the process of creating a robots.txt file, especially for those without technical expertise. It saves time and reduces the risk of syntax errors when specifying instructions for search engine robots.

Q. How do I implement the generated robots.txt file on my website?

A. After generating the robots.txt file with a Robots.txt Generator, upload it to the root directory of your website using FTP or the file manager offered by your hosting provider. Make sure the file is named exactly "robots.txt", in lowercase, and that web crawlers can reach it.
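Crawlers look for the file at one fixed location: the path `/robots.txt` on the same scheme and host as the page they want to fetch. The short Python sketch below derives that address from any page URL so you can open it in a browser and confirm the upload worked. The function name `robots_txt_url` and the example.com addresses are hypothetical.

```python
from urllib.parse import urlsplit, urlunsplit


def robots_txt_url(page_url: str) -> str:
    """Return the robots.txt address that applies to the given page URL."""
    parts = urlsplit(page_url)
    # Keep scheme and host, replace the path, drop query and fragment.
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


if __name__ == "__main__":
    print(robots_txt_url("https://shop.example.com/blog/post?x=1"))
```

A file uploaded to a subdirectory, such as `/blog/robots.txt`, is not where crawlers look, and each subdomain needs its own copy.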

Q. Can I update or modify the robots.txt file later?

A. Yes, you can update or modify the robots.txt file at any time. To make changes, generate a new robots.txt file with the Robots.txt Generator and replace the existing file in your website's root directory.

Q. Are there any risks associated with using a robots.txt file?

A. While robots.txt files are useful for controlling how a website is crawled, it is crucial to make sure you are not unintentionally blocking access to important pages or sections. Careful consideration and periodic review of your robots.txt file are necessary to avoid unintended consequences.
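One way to run such a review is to test a list of pages you care about against the file before uploading it. The sketch below uses Python's standard `urllib.robotparser`; the helper name `find_blocked_urls`, the sample rule, and the example.com URLs are assumptions made for illustration. The standard library parser uses simple prefix matching, so results for wildcard rules may differ from what a given search engine does.

```python
from typing import Iterable, List
from urllib.robotparser import RobotFileParser


def find_blocked_urls(robots_txt: str,
                      important_urls: Iterable[str],
                      user_agent: str = "Googlebot") -> List[str]:
    """Return the URLs that the given robots.txt text blocks for user_agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in important_urls
            if not parser.can_fetch(user_agent, url)]


if __name__ == "__main__":
    # "Disallow: /p" was meant for one folder but matches every path
    # that starts with /p, including /products/ and /pricing.
    draft = "User-agent: *\nDisallow: /p\n"
    pages = [
        "https://example.com/",
        "https://example.com/products/widget",
        "https://example.com/pricing",
        "https://example.com/blog/",
    ]
    for url in find_blocked_urls(draft, pages):
        print("blocked:", url)
```

An empty result means none of the listed pages are blocked for that user agent under these matching rules.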

Q. Can a robots.txt file completely block search engines from indexing my site?

A. A robots.txt file is a set of instructions for search engine robots, but it is ultimately up to each crawler to honor them. Most well-behaved search engine robots respect the directives, yet robots.txt is not a foolproof way to prevent indexing: a blocked page can still appear in search results if other sites link to it. Stronger restrictions call for other methods, such as password protection or a "noindex" robots meta tag, and a noindex tag is only seen if the page is not blocked from crawling.